What Is the Difference Between High-Level and Low-Level Test Cases?

High-level test cases cover the core functionality of a product, such as standard business flows. Low-level test cases are those related to the user interface (UI) of the application.
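To make the distinction concrete, here is a minimal sketch of the same scenario written at both levels. The `CaseSpec` structure, the funds-transfer scenario, and every step and value in it are hypothetical illustrations, not taken from any real project or tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CaseSpec:
    """A test case at any level of detail (hypothetical structure)."""
    title: str
    expected_outcome: str
    steps: List[str] = field(default_factory=list)  # left empty for abstract cases

    def is_high_level(self) -> bool:
        # A high-level case states intent and outcome and leaves the steps to the tester.
        return not self.steps


# High-level: describes the business flow only.
high_level = CaseSpec(
    title="Customer transfers funds between own accounts",
    expected_outcome="Both balances reflect the transfer",
)

# Low-level: spells out each UI interaction (all values are made up).
low_level = CaseSpec(
    title="Customer transfers funds between own accounts",
    expected_outcome="Both balances reflect the transfer",
    steps=[
        "Log in as a customer with two accounts",
        "Open the Transfers screen",
        "Select the source and destination accounts",
        "Enter the amount 50.00 and click Submit",
        "Verify the confirmation message and both balances",
    ],
)
```

The high-level case survives a UI redesign unchanged, while every step of the low-level case may need rewriting, which is where the maintenance cost discussed in this article comes from.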

High-Level Test Cases vs Low-Level Test Cases

Typically, early in a project a decision has to be made: write high-level (abstract) or low-level (detailed) test cases. Academic test theory tells us that the level of detail depends on the requirements. Well-understood requirements result in the use of high-level test cases, and poorly understood requirements result in the use of low-level test cases (i.e. highly detailed test cases). The theory is that you must write detailed test cases to elicit the requirements when they are not understood or, in the case of a legacy application, do not exist.

This theory needs to be challenged, as it can result in significant testing effort and a huge amount of “testing debt”. It is normal for test teams to support the shift-left approach to testing, in particular by providing early feedback on the analysis of requirements. Workshops, interviews and similar techniques are effective ways to analyse and capture requirements. Test teams should not, however, support inefficient approaches to testing, such as writing detailed test cases in an attempt to compensate for requirements that are poorly documented or simply not understood.

Two Possible Approaches

Let me detail two possible approaches to support this argument.

Approach 1) Low level test cases

- The requirements are deemed to be poorly understood or poorly documented.

- Low level (detailed) test cases will be written to help develop the requirements.

- A large team of testers will be sourced for the activity, typically on short-term contracts, and often the majority of the team are junior.

- Test teams spend significant effort on writing detailed test cases. Often this will help challenge the requirements and may improve the overall understanding of the requirements.

- Creating and reviewing the scripts may leave no time for other important test activities.

- Software is delivered to the test team.

- But the detailed test cases still do not match the system under test. Thus a lot of effort is spent updating the detailed test cases either before or during execution.

- Test case execution takes significant effort because of the volume and detail of the test cases.

- The updating and execution of the test cases takes up the available test cycle time and allows no time to check outside the test cases e.g. exploratory testing.

- The testers gain experience but only develop a narrow range of competencies.

- The product is delivered to the customer and, most likely, many use cases have been missed.

- The customer is unhappy and wants changes to the product (re-work).

- However, because the software has now been delivered, the test team has been downsized.

- Knowledge gained to date is lost.

- The Product Manager states some features need updating and the test cases need updating.

- The few remaining testers voice their concerns that updating the large volume of detailed test cases is too much for the reduced team.

- Ultimately they must find a solution to this problem of large testing debt with few resources.

- Often the team will create a regression pack of high level test cases that a smaller team can manage effectively.

Approach 2) High level test cases

- The requirements are deemed to be poorly understood or poorly documented.

- It is decided to organise a workshop and invite people from outside the team.

- A list of key scenarios is written up on a white board.

- Everyone gives their feedback on the key scenarios and further scenarios are considered.

- The team feel that they have a good understanding of the key scenarios and that everyone is on the same page.

- High level test cases are written to match the scenarios from the workshop.

- Test teams spend minimal effort creating and reviewing the test cases.

- As there is now no need to write high volumes of low level test cases, the test team does not need to be as large as traditional scripted projects.

- A small balanced test team is sourced with varying levels of experience and backgrounds; typically the more experienced testers will develop the less experienced testers.

- The test team can focus on adding value.

- They review the test cases and update them based on the feedback received.

- They ask the friendly developers to add boundary value analysis and other related tests to the unit tests.

- They participate in code reviews.

- The testers pair up with the developers to test together.

- The focus of testing is on the scenarios and exploratory testing.

- The unscripted exploratory testing complements the scripted tests.

- As issues are encountered, they are fixed immediately.

- Non-functional requirements such as security and performance are considered. Someone notes that non-functional requirements should be included in future workshops.

- Software is delivered to the test team.

- Testers perform checking, simply ensuring that the scenarios from the workshop have been implemented correctly.

- Updating and executing the test cases takes up only part of the test cycle time, which allows the team to perform exploratory testing and other valuable test activities.

- The team hold a bug bash (“pound on the product”) and invite people from outside the team. Valuable insights are gained and the product is iterated.

- The team present a product demo to the customer prior to beta. Further valuable insights are gained and the product is iterated.

- The testers gain experience and develop a wide range of competencies.

- The product is delivered to the customer.

- The customer is happy, in fact so happy that they can’t get enough of this awesome software and want more features.

- The small test team is retained after the release of the software.

- Knowledge is retained within the team.

- The Project Manager states that new features will be added, which may involve updating some existing features and their test cases.

- It is decided that the workshop and early testing were such a success that this will be the “new way of working”.

- After conducting the workshop, the high level test cases are quickly updated and new high level test cases are written.

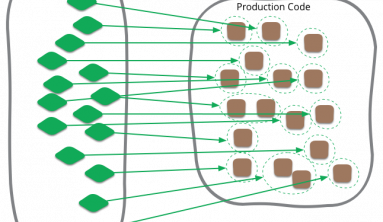

- It is decided that from now on the developers and testers will pair on automation and create an automation suite of high level test cases.
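The boundary value analysis that the testers ask developers to add to the unit tests in Approach 2 can be sketched as follows. This is only an illustration: the rule `is_valid_quantity` and its range of 1 to 100 are hypothetical, and a real project would express the checks in its own unit test framework.

```python
def boundary_values(minimum: int, maximum: int) -> list:
    """Return the classic boundary test points for an inclusive integer range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


def is_valid_quantity(quantity: int) -> bool:
    """Hypothetical rule under test: an order quantity must be 1 to 100 inclusive."""
    return 1 <= quantity <= 100


def check_quantity_boundaries() -> dict:
    """Evaluate the rule at every boundary point and return value -> result."""
    return {value: is_valid_quantity(value) for value in boundary_values(1, 100)}


if __name__ == "__main__":
    # Values just outside the range should be rejected, all others accepted.
    for value, accepted in check_quantity_boundaries().items():
        print(value, "accepted" if accepted else "rejected")
```

Pushing these checks down into unit tests keeps the high-level test cases short, because the scenario-level tests no longer need to enumerate every edge value through the UI.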

Summary

In summary, I believe it is rarely optimal for test teams to write high volumes of low-value test cases.

- Workshops are a great way of figuring out the important scenarios.

- Testers can then focus on adding value through progressive test approaches such as shift left, exploratory testing and test automation.

- Testers are more engaged, use their key strengths, develop a wider range of competencies and find their work enjoyable.

Products delivered are of a higher quality and provide greater business value.